MATLAB-Based Facial Recognition

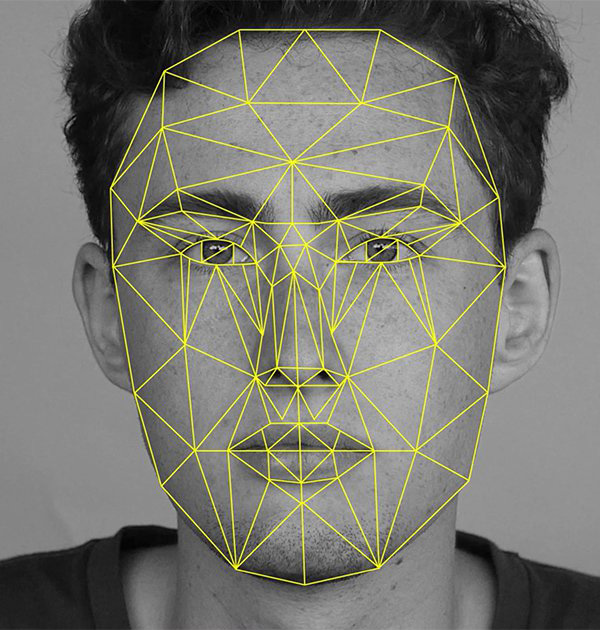

The concept of computers "seeing", such as it is, has always fascinated me. Seeing faces, even in humans, is a highly specialised process that happens in an equally specialised part of the brain, the fusiform gyrus. So much so that people see faces that aren't there, such as in cars, tea leaves and even burnt toast. Using my newly learned MATLAB kung fu I decided to investigate how facial recognition is handled by computers.

I used edge detection and corner detection algorithms to create a feature map, which was then fitted parametrically to an eye-nose-mouth model. A lot of the underlying methods were not readily tweakable, but I read that the fitting used a Nelder-Mead simplex search to map the extracted features onto the model. I could then use the fitted parameters to compare two faces and calculate a probability that they belong to the same person. The accuracy of this was surprisingly high; however, it was particularly susceptible to differing head angles. Makes sense, I guess.
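To make the comparison step a bit more concrete, here is a minimal sketch in Python (not the original MATLAB code). It assumes each face has already been reduced to a short vector of fitted model parameters, and it maps the distance between two such vectors to a score between 0 and 1. The function name, the example parameters and the `scale` value are all hypothetical, and the score is only a similarity measure, not a calibrated probability.

```python
import math


def same_person_score(params_a, params_b, scale=1.0):
    """Map the distance between two face-parameter vectors to a score in (0, 1].

    Identical vectors score 1.0; the score decays towards 0 as the
    vectors move apart. `scale` (an assumed tuning knob) sets how fast.
    """
    if len(params_a) != len(params_b):
        raise ValueError("parameter vectors must have the same length")
    sq_dist = sum((a - b) ** 2 for a, b in zip(params_a, params_b))
    return math.exp(-sq_dist / (2.0 * scale ** 2))


# Hypothetical parameters: eye spacing, eye-to-nose and nose-to-mouth
# distances, each normalised by face width.
face_1 = [0.42, 0.31, 0.18]
face_2 = [0.43, 0.30, 0.19]  # close to face_1
face_3 = [0.55, 0.25, 0.26]  # noticeably different proportions

score_same = same_person_score(face_1, face_2, scale=0.05)
score_diff = same_person_score(face_1, face_3, scale=0.05)
print(score_same > score_diff)
```

A change in head angle alters the apparent distances between features, so the parameter vectors drift apart even for the same person, which fits the sensitivity to pose noted above.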

I first did this on a small dataset of still images, but it also worked quite well on video. Given the computing power at my disposal I could only process a few frames per second, but that was sufficient to make an interesting demo. The change between frames was small enough for a very simple tracking module to work under most conditions. Failures occurred, understandably, with quick changes in pose or position; however, I suspect that more sophisticated trackers could fix this. Even a simpler assignment step could fill gaps in tracking in a pinch, though I suspect this could bring its own host of issues, especially in "target rich" scenes.
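As an illustration of what a very simple assignment step could look like, here is a sketch in Python (again, not the original MATLAB code). It assumes each tracked face is represented by the pixel position of its centre, and it greedily matches every track to its nearest new detection, ignoring anything that jumped further than `max_jump`. The function name, the track labels and the threshold are all hypothetical.

```python
import math


def assign_detections(tracks, detections, max_jump=40.0):
    """Greedy nearest-neighbour matching of tracked faces to new detections.

    tracks:     dict mapping track id -> (x, y) from the previous frame
    detections: list of (x, y) face centres found in the current frame
    Returns a dict mapping track id -> index into `detections`.
    Tracks with no detection within `max_jump` pixels stay unmatched.
    """
    candidates = []
    for track_id, (tx, ty) in tracks.items():
        for index, (dx, dy) in enumerate(detections):
            distance = math.hypot(tx - dx, ty - dy)
            if distance <= max_jump:
                candidates.append((distance, track_id, index))

    # Take the closest pairs first; each track and detection is used once.
    candidates.sort()
    matches = {}
    used_detections = set()
    for distance, track_id, index in candidates:
        if track_id in matches or index in used_detections:
            continue
        matches[track_id] = index
        used_detections.add(index)
    return matches


tracks = {"face_a": (100.0, 120.0), "face_b": (300.0, 110.0)}
detections = [(305.0, 112.0), (104.0, 118.0), (500.0, 400.0)]
matches = assign_detections(tracks, detections)
print(matches)
```

The distance gate is what makes this break down on fast movement: a face that moves more than `max_jump` between frames simply loses its track. In a crowded scene several detections can fall inside the gate at once, and a greedy match can then swap identities.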